Playing around with the ChatGPT and Bard user interfaces has been fun, but I end up using the Meta AI chat a lot more, since its interface slides smoothly into my daily workflow. Meta AI is great at this and is super useful for daily tasks and lookups. I also wanted the option of using the OpenAI API, as the GPT models seem to give me the best results, at least for some of my more random queries. An unexpected benefit is being able to use this on WhatsApp when I'm traveling internationally, as Meta AI on WhatsApp currently seems to work only in the US market.

Enter Aristotle: a WhatsApp-based bot that plugs into the OpenAI model of your choosing to give you the result you want. Granted, it's just as easy to flip to a browser on your phone and get the same info, but I decided to replicate this functionality for kicks, as chat seems to be my form factor of choice over loading a browser and logging into an interface.

This was also a good opportunity to test out the Assistants API and function calling. I also had an Ubuntu machine lying around at home that I wanted to put to use, and a small, simple use case like this is a good fit: all it needs to do is stay awake and make the API calls when triggered. Credit to the OpenAI Cookbook for a lot of the boilerplate.

Steps

The basic flow was something like this:

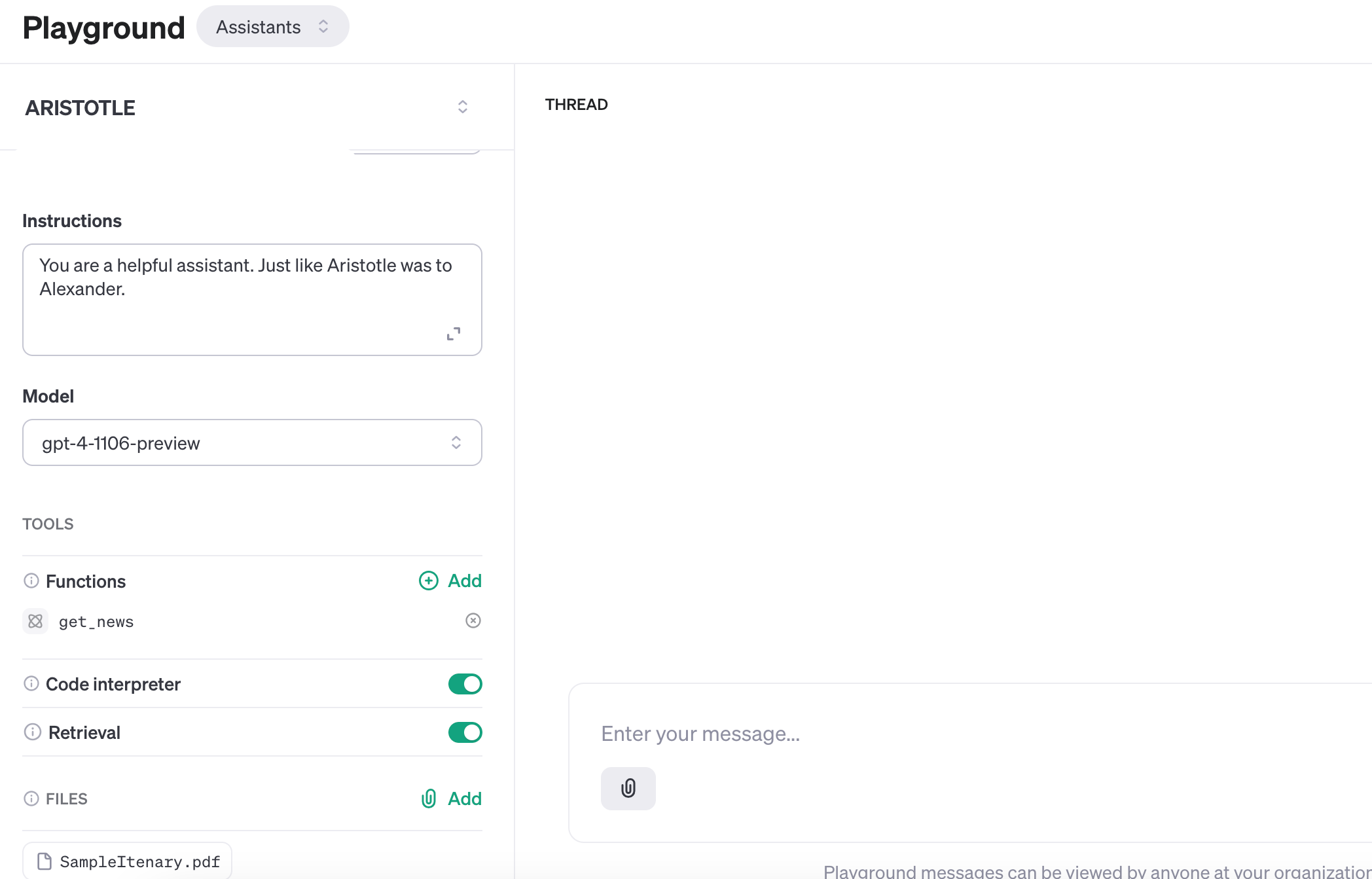

- Use the OpenAI Playground to set up the Assistant. Create a get_news function with the parameters you will reference in the code

- Grab your OpenAI API key and the Assistant ID

- Create a Python function to grab the latest news from an API (I used newsapi.org)

- Use the Assistants API to write a script that responds to your query and triggers the function when you ask for news

- Wrap the script in a Flask app that you can run on a server

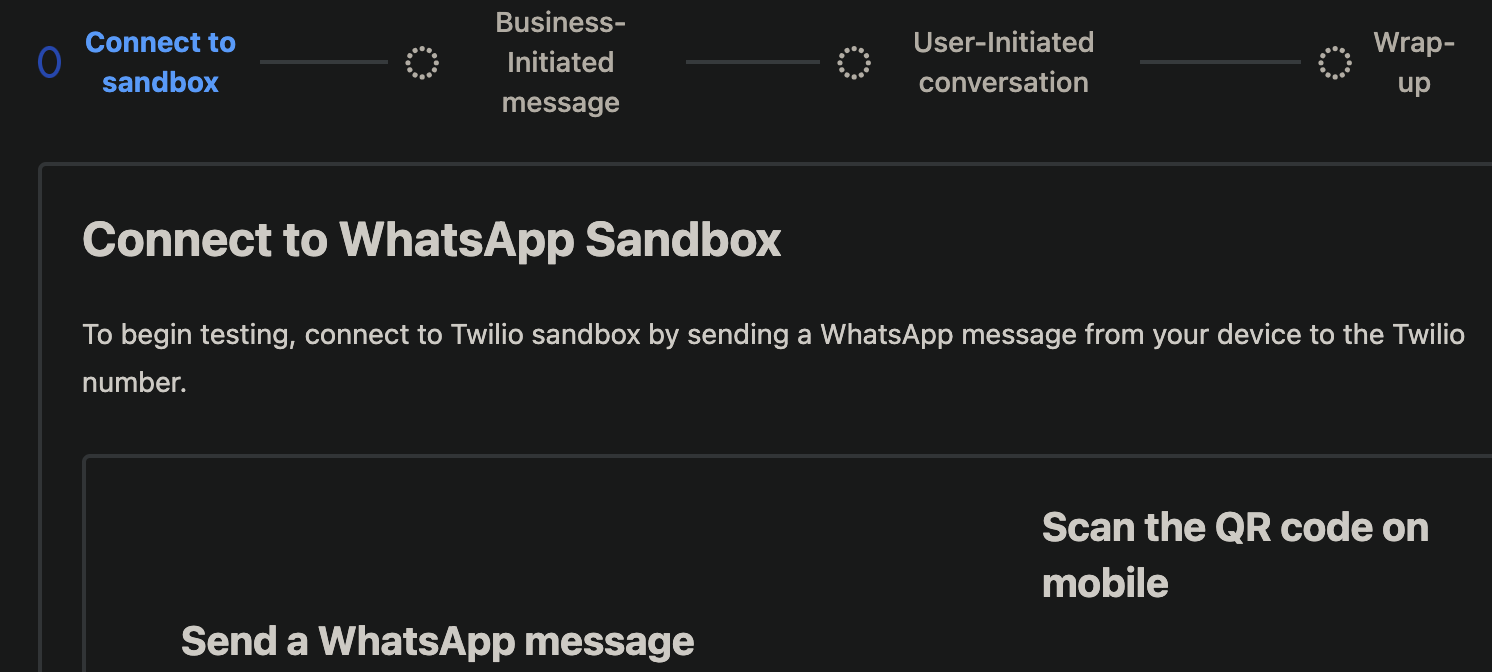

- Set up a Twilio sandbox to obtain a sandbox WhatsApp number and connect to it from your phone

- Start chatting!
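The steps above leave a handful of secrets on the server: the OpenAI key, the Assistant ID, the News API key and the Twilio credentials. A minimal sketch of loading them from environment variables at startup so that a missing one fails loudly; every variable name here is an assumption rather than something the project prescribes (the official `openai` client does read `OPENAI_API_KEY` by default):

```python
import os

# Hypothetical environment variable names; adjust to your own setup
REQUIRED_VARS = (
    "OPENAI_API_KEY",
    "ASSISTANT_ID",
    "NEWS_API_KEY",
    "TWILIO_ACCOUNT_SID",
    "TWILIO_AUTH_TOKEN",
    "TWILIO_FROM_NUMBER",
    "TWILIO_TO_NUMBER",
)


def load_config(env=os.environ):
    """Return the bot's settings as a dict, failing fast if any are missing or empty."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED_VARS}
```

Calling `load_config()` once when the Flask app starts means a typo in the server's environment shows up immediately instead of on the first WhatsApp message.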

Assistant

Setting up the Assistant in the playground was pretty easy.
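If you would rather script the Assistant than click through the playground, the same setup can be done with the `openai` Python SDK's `client.beta.assistants.create`. A sketch, assuming a function tool named `fetch_news` with a single `skeyword` parameter: the name matches the Python function in the next section because the dispatcher calls functions by name (if you call it `get_news` in the playground, the Python side needs the same name). The model name and instructions are placeholders.

```python
# Tool definition for the news function, in the Assistants API "function" tool format
NEWS_TOOL = {
    "type": "function",
    "function": {
        "name": "fetch_news",
        "description": "Fetch the most popular recent news articles for a keyword",
        "parameters": {
            "type": "object",
            "properties": {
                "skeyword": {
                    "type": "string",
                    "description": "Search keyword, e.g. 'tesla' or 'world cup'",
                }
            },
            "required": ["skeyword"],
        },
    },
}


def create_assistant(client, model="gpt-4-turbo"):
    """Create the assistant through the API instead of the playground.

    `client` is an openai.OpenAI() instance; the model name is a placeholder.
    """
    assistant = client.beta.assistants.create(
        name="Aristotle",
        instructions="You are Aristotle, a concise assistant that answers over WhatsApp.",
        model=model,
        tools=[NEWS_TOOL],
    )
    return assistant.id  # the Assistant ID the bot script needs
```

Usage would be along the lines of `from openai import OpenAI` followed by `create_assistant(OpenAI())`, which returns the same kind of ID the steps above ask you to copy from the playground.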

Get News

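```python
import os
import requests

news_api_key = os.environ.get("NEWS_API_KEY")  # assumed env var name

# Function to fetch news articles
def fetch_news(skeyword):
    # Passing params lets requests URL-encode the keyword safely
    params = {"q": skeyword, "sortBy": "popularity", "pageSize": 5, "apiKey": news_api_key}
    response = requests.get("https://newsapi.org/v2/everything", params=params, timeout=10)
    if response.status_code == 200:
        return response.json()
    return "No response from the news API"
```

This function fetches news articles from the News API. It takes a single argument, `skeyword`, the search keyword used to find relevant articles, and returns the parsed JSON response, or an error string if the API does not return a 200 status.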
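The raw JSON can be bulky (each article carries its source, author, description, content and more), and all of it becomes tool output the model has to read. A hypothetical `summarize_articles` helper that trims the payload down to one line per article before it is handed back to the assistant; the sample payload only mimics the response shape (`status`, `totalResults`, `articles`) and is not real data:

```python
def summarize_articles(payload, limit=5):
    """Reduce a News API /v2/everything response to 'title (source): url' lines."""
    if not isinstance(payload, dict):
        # fetch_news returns an error string when the request fails
        return [str(payload)]
    lines = []
    for article in payload.get("articles", [])[:limit]:
        source = (article.get("source") or {}).get("name") or "unknown source"
        title = article.get("title") or "Untitled"
        lines.append(f"{title} ({source}): {article.get('url', '')}")
    return lines


# Made-up sample in the shape of a News API response
sample = {
    "status": "ok",
    "totalResults": 1,
    "articles": [
        {"source": {"id": None, "name": "Example News"},
         "title": "Rover finds water", "url": "https://example.com/rover"},
    ],
}
print(summarize_articles(sample))
```

Returning `summarize_articles(response.json())` instead of the full JSON would also help keep the final WhatsApp reply short.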

Set up the AI bot

The `chat_with_bot` function is the core of the chatbot. It starts by getting the user's message from the form that Twilio posts to the webhook. It then creates a new thread with OpenAI and appends the user's message to the thread to keep the context. The assistant is then run with the given instructions, which give it its personality. The function waits until the run is no longer queued or in progress. If the run status is "requires_action", it executes the required functions and submits their outputs back to the assistant, then waits again until the run is no longer queued, in progress, or requiring action. After the run is complete, it retrieves the messages from the thread and adds them to the conversation history. Finally, it gets a response from ChatGPT and returns it. The comments in the code should be self-explanatory.

I did notice WhatsApp messages coming back empty when the response length was not capped at what Twilio can handle. I finally settled on 250 tokens, as that seemed to work for most of my queries.
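The wait-until-done step is easy to get subtly wrong: in Python, `a or b and c` parses as `a or (b and c)`, so a condition like `status == "queued" or status == "in_progress" and count < 5` only applies the retry cap to the in-progress case. A minimal, SDK-agnostic sketch of the polling step, assuming a zero-argument `retrieve` callable that returns an object with a `status` attribute; the `wait_for_run` name and the fake run objects are hypothetical:

```python
import time
from types import SimpleNamespace

PENDING = ("queued", "in_progress")


def wait_for_run(retrieve, run, max_polls=5, delay=1.0):
    """Poll retrieve() until the run leaves a pending state or max_polls is reached.

    `retrieve` could be a lambda wrapping
    openaiclient.beta.threads.runs.retrieve(thread_id=..., run_id=...).
    """
    polls = 0
    # The membership test avoids the and/or precedence trap: the cap applies to every pending status
    while run.status in PENDING and polls < max_polls:
        time.sleep(delay)
        run = retrieve()
        polls += 1
    return run


# Fake runs standing in for the API
statuses = iter(["in_progress", "in_progress", "requires_action"])
fake_retrieve = lambda: SimpleNamespace(status=next(statuses))
final = wait_for_run(fake_retrieve, SimpleNamespace(status="queued"), delay=0)
print(final.status)  # requires_action
```

The caller still has to check the returned status, since hitting `max_polls` hands back a run that may be pending.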

```python
import os
import time

from flask import request
from openai import OpenAI

openaiclient = OpenAI()  # reads OPENAI_API_KEY from the environment
assistant_id = os.environ["ASSISTANT_ID"]  # assumed env var holding the Assistant ID
instructions = "You are Aristotle, a helpful assistant."  # placeholder personality
conversation_history = []


def get_chatgpt_response(question, conversation_history, instructions):
    # Hypothetical helper standing in for the script's final ChatGPT call;
    # max_tokens=250 keeps replies short enough for Twilio/WhatsApp
    completion = openaiclient.chat.completions.create(
        model="gpt-4-turbo",  # placeholder model name
        messages=[{"role": "system", "content": instructions}]
        + conversation_history
        + [{"role": "user", "content": question}],
        max_tokens=250,
    )
    return completion.choices[0].message.content


def chat_with_bot():
    # Obtain the request's user message
    question = request.form.get('Body', '').lower()
    print("user query ", question)

    thread = openaiclient.beta.threads.create()

    try:
        # Insert user message into the thread
        openaiclient.beta.threads.messages.create(
            thread_id=thread.id,
            role="user",
            content=question,
        )

        # Run the assistant
        run = openaiclient.beta.threads.runs.create(
            thread_id=thread.id,
            assistant_id=assistant_id,
            instructions=instructions,
        )

        # Pause until it is no longer queued (give up after 5 polls)
        count = 0
        while run.status in ("queued", "in_progress") and count < 5:
            time.sleep(1)
            run = openaiclient.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
            count += 1

        if run.status == "requires_action":
            # Obtain the tool outputs by running the necessary functions
            # (run_functions is described in the next section)
            tool_outputs = run_functions(run.required_action)

            # Submit the outputs to the Assistant
            run = openaiclient.beta.threads.runs.submit_tool_outputs(
                thread_id=thread.id,
                run_id=run.id,
                tool_outputs=tool_outputs,
            )

            # Wait until it is no longer queued, in progress, or requiring action
            count = 0
            while run.status in ("queued", "in_progress", "requires_action") and count < 5:
                time.sleep(2)
                run = openaiclient.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
                count += 1

        # After run is completed, fetch thread messages
        messages = openaiclient.beta.threads.messages.list(thread_id=thread.id)

        # TODO look for method call in data in messages and execute method
        print("---------------------------------------------")
        print(f"THREAD MESSAGES: {messages}")

        # Add every thread message to the conversation history
        for message in messages:  # iterating the page auto-paginates
            system_message = {"role": "system", "content": message.content[0].text.value}
            conversation_history.append(system_message)

        # Get the response from ChatGPT
        answer = get_chatgpt_response(question, conversation_history, instructions)
        print("---------------------------------------------")
        print(f"ARISTOTLE: {answer}")
        return str(answer)

    except Exception as e:
        print(f"An error occurred: {e}")
        return 'Sorry, I could not process that.'
```

run_functions

This function is used to execute any required actions that the assistant needs to perform. It loops through the tool calls in the required actions, gets the function name and arguments, and calls the corresponding Python function if it exists. The function’s output is then serialized to JSON and added to the list of tool outputs, which is returned at the end of the function.
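A minimal sketch of `run_functions` as described above, assuming the `openai` SDK's shape for a required action (`required_action.submit_tool_outputs.tool_calls`, each call carrying an `id` plus `function.name` and a JSON-encoded `function.arguments`). The `fetch_news` stub and the `AVAILABLE_FUNCTIONS` registry are illustrative, and `SimpleNamespace` objects stand in for the SDK types so it runs offline:

```python
import json
from types import SimpleNamespace


def fetch_news(skeyword):
    # Stub standing in for the real News API call
    return {"articles": [{"title": f"Story about {skeyword}"}]}


# Explicit registry of functions the assistant may call (an assumption;
# looking names up in globals() would also work but exposes everything)
AVAILABLE_FUNCTIONS = {"fetch_news": fetch_news}


def run_functions(required_action):
    tool_outputs = []
    for tool_call in required_action.submit_tool_outputs.tool_calls:
        name = tool_call.function.name
        # The API sends the arguments as a JSON-encoded string
        args = json.loads(tool_call.function.arguments or "{}")
        func = AVAILABLE_FUNCTIONS.get(name)
        result = func(**args) if func else {"error": f"Unknown function: {name}"}
        # Serialize the output to JSON, keyed by the tool call it answers
        tool_outputs.append({"tool_call_id": tool_call.id, "output": json.dumps(result)})
    return tool_outputs


# Fake required action mimicking the SDK objects
fake_action = SimpleNamespace(submit_tool_outputs=SimpleNamespace(tool_calls=[
    SimpleNamespace(id="call_1",
                    function=SimpleNamespace(name="fetch_news", arguments='{"skeyword": "mars"}')),
]))
print(run_functions(fake_action))
```

Returning an error payload for an unknown name, rather than skipping it, still gives every tool call an output, which the API expects before the run can continue.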

The functions are wrapped in a Flask app that runs 24/7 on a machine I have at home. Considering the whopping number of active users (i.e. 1), server load is not really a concern.

TWILIO

Setting up a WhatsApp connection at https://console.twilio.com/ is super easy; Twilio's documentation and interface make it straightforward.

The free credits make it easy to get started. Once the WhatsApp Sandbox is set up, the code below connects the application to Twilio and WhatsApp.

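```python
import os

from flask import Flask
from twilio.rest import Client
from twilio.twiml.messaging_response import MessagingResponse

app = Flask(__name__)

# Assumed env var names; numbers are digits only, without the leading "+"
client = Client(os.environ["TWILIO_ACCOUNT_SID"], os.environ["TWILIO_AUTH_TOKEN"])
TWILIO_FROM_NUMBER = os.environ["TWILIO_FROM_NUMBER"]
TWILIO_TO_NUMBER = os.environ["TWILIO_TO_NUMBER"]


@app.route("/whatsapp", methods=["POST"])  # point the sandbox webhook at this (assumed) path
def whatsapp_reply():
    twilio_response = MessagingResponse()
    answer = chat_with_bot()  # defined in the previous section

    # Add all the numbers to which you want to send a message in this list
    # (not the sandbox's own number: Twilio won't send from a number to itself)
    numbers = [TWILIO_TO_NUMBER]
    for number in numbers:
        message = client.messages.create(
            body=answer,
            from_='whatsapp:+' + TWILIO_FROM_NUMBER,
            to='whatsapp:+' + number,
        )
        print(message.sid)

    # The answer was already sent via the REST API, so reply with empty TwiML
    return str(twilio_response)
```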
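Capping the model at 250 tokens is one way around the empty-message problem mentioned earlier. Another, assuming Twilio's documented 1,600-character limit on a message body applies to your WhatsApp sender, is to split long answers and send each piece with `client.messages.create` in the loop above. A minimal sketch with a hypothetical `split_message` helper:

```python
def split_message(text, limit=1600):
    """Split text into chunks of at most `limit` characters, preferring whitespace breaks."""
    chunks = []
    text = text.strip()
    while len(text) > limit:
        # Break at the last space within the limit; fall back to a hard cut
        cut = text.rfind(" ", 0, limit + 1)
        if cut <= 0:
            cut = limit
        chunks.append(text[:cut].rstrip())
        text = text[cut:].lstrip()
    if text:
        chunks.append(text)
    return chunks
```

In the webhook, the single `client.messages.create(body=answer, ...)` call would become one call per `chunk in split_message(answer)`, keeping each WhatsApp message under the limit.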

So far, this has been useful, especially for switching out models while traveling. Some things I would love to add:

- Add more custom functions

- Speed up the backend by running on a beefier server

- Tune the application for faster responses

Code here: https://github.com/vishwanath79/aristotle-whatsapp